Bridging the Safety Gap for Machine Learning Models in Autonomous Surface Vehicles

Autonomous Surface Vehicles (ASVs) are revolutionizing maritime transportation, driven by advancements in Artificial Intelligence (AI), particularly Machine Learning (ML). However, ensuring the safety of these systems in unpredictable, real-world conditions remains a significant challenge.

Existing safety assurance methods, such as AMLAS (Assurance of Machine Learning for use in Autonomous Systems), provide structured stages to design safe ML models [1]. While AMLAS is invaluable in the development phase, it has limited provisions for handling real-world operational challenges, such as data shift, model uncertainty, data corruption, and data novelties.

To provide a complete safety framework for ML models, it’s crucial to align with established software safety standards. ML models are part of larger systems, so their assurance must be integrated into the safety lifecycle of the whole system. Our methodology builds on AMLAS by incorporating the IEC 61508 functional safety lifecycle, a widely used standard for the functional safety of electrical, electronic, and programmable electronic safety-related systems. This lifecycle can be further adapted for ML-specific contexts, as outlined in ISO/IEC TR 5469, a technical report on functional safety and AI systems.

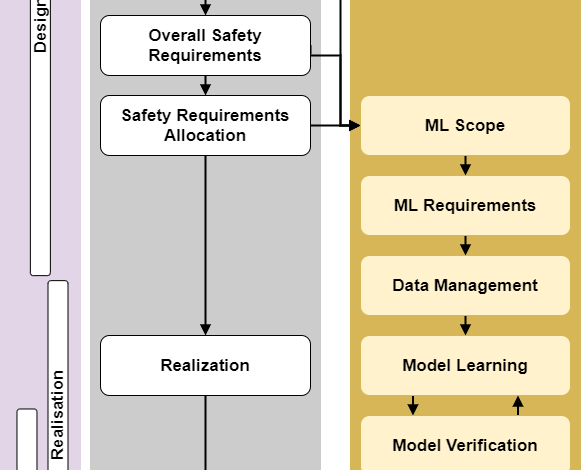

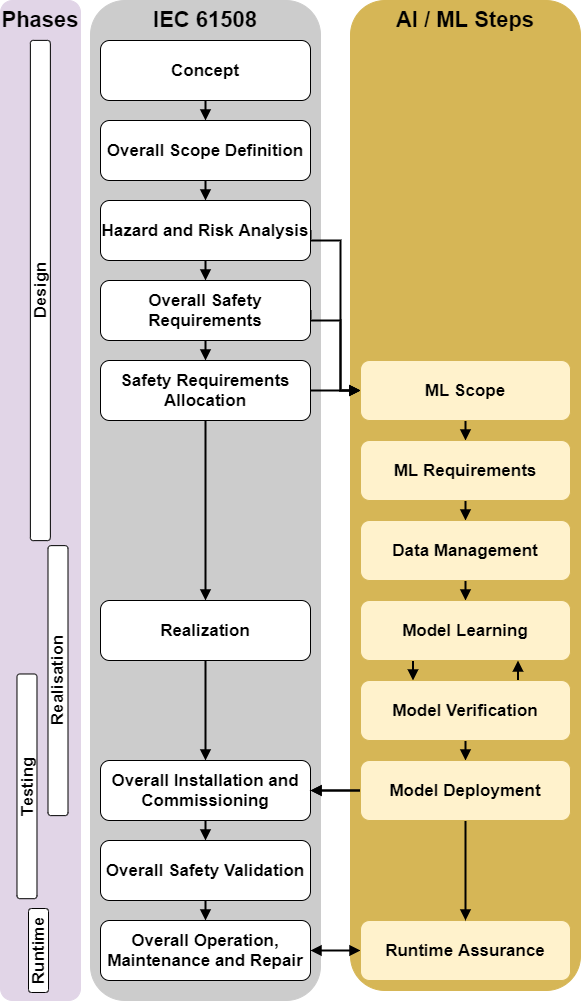

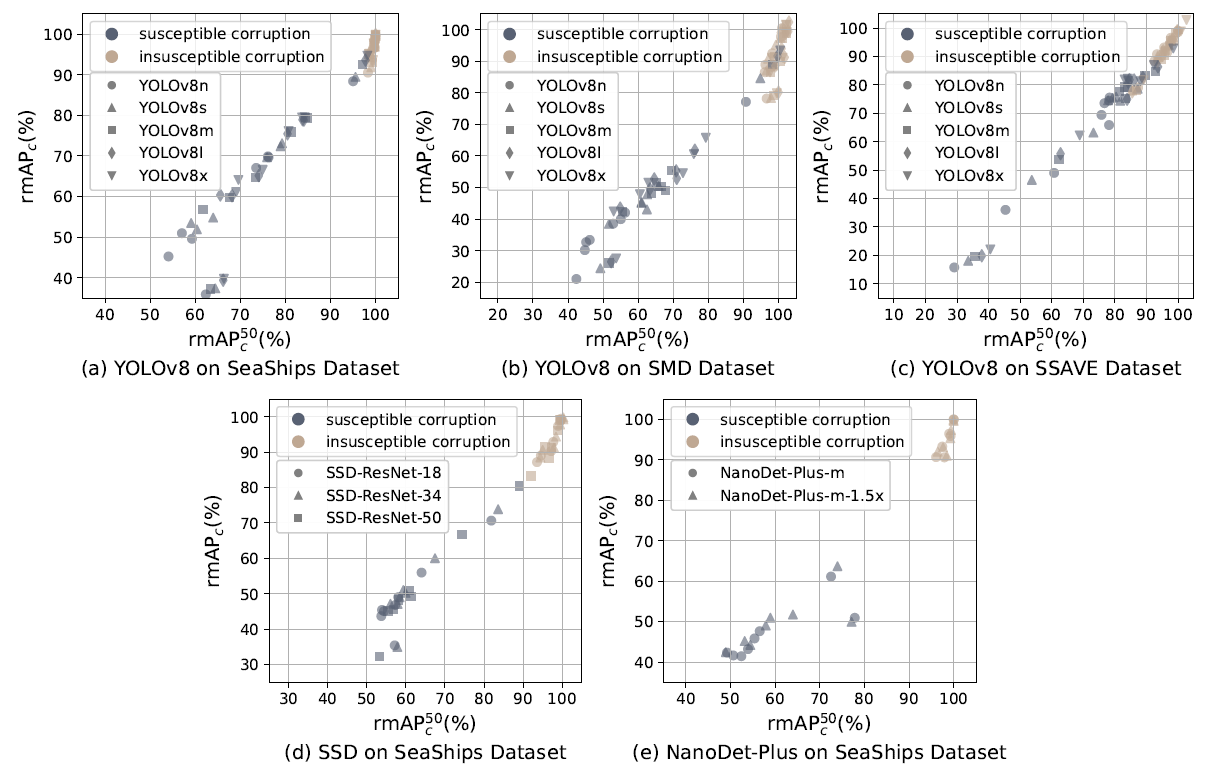

IEC 61508 outlines 16 key safety assurance steps across a product’s lifecycle, grouped into three phases: analysis, realization and testing, and operation. Our assurance framework introduces an ML-specific safety assurance cycle, closely aligned with a simplified IEC 61508 lifecycle, as depicted in Figure 1. The framework applies to ML models deployed in ASVs. For example, the methods in our previous paper [2] can serve as the model verification stage (results shown in Figure 2), working alongside the other stages to ensure the safety of object detection models used in ASVs.

Figure 1. Alignment of the safety framework for ML models with the IEC 61508 lifecycle.

Figure 2. Corruption robustness evaluation results for real-time object detection models across 3 waterborne datasets (SeaShips, SMD, SSAVE) under 15 corruptions.

The 15 corruptions are further categorized as susceptible or insusceptible based on model performance under each corruption. The x-axis and y-axis show two metrics of model robustness: the higher the values, the more model performance is preserved under a corruption type.
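To make the idea of "performance preserved under corruption" concrete, here is a minimal Python sketch. It is not the metric pair plotted in Figure 2: the retention ratio (corrupted score divided by clean score), the function names, the 0.8 threshold, and the scores in the usage lines are all illustrative assumptions, not results from [2].

```python
def retention(clean_score: float, corrupted_score: float) -> float:
    """Fraction of clean performance kept under a corruption (assumed metric)."""
    if clean_score <= 0:
        raise ValueError("clean_score must be positive")
    return corrupted_score / clean_score


def categorize(clean_score: float,
               corrupted_scores: dict[str, float],
               threshold: float = 0.8) -> dict[str, str]:
    """Label each corruption by comparing its retention to a hypothetical threshold."""
    labels = {}
    for name, score in corrupted_scores.items():
        kept = retention(clean_score, score)
        labels[name] = "insusceptible" if kept >= threshold else "susceptible"
    return labels


# Made-up scores, purely to show the call shape.
example = categorize(0.8, {"fog": 0.4, "jpeg_compression": 0.72})
```

With these placeholder numbers, the model keeps half of its clean score under `fog` and about 90% under `jpeg_compression`, so the first is labeled susceptible and the second insusceptible.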

For the runtime assurance part of this lifecycle, we propose seven stages, implemented by deploying safety monitors according to stage-by-stage guidelines. These stages help ML systems operate reliably even in dynamic and uncertain environments. At runtime, the monitors continuously observe the system and its environment and detect the operational state of the running ML model. When issues arise, they trigger interventions to prevent failures.
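The observe, detect, and intervene loop described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the seven-stage method itself: the `SafetyMonitor` class, the brightness-based novelty check, the confidence threshold, and the intervention names are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import mean


class State(Enum):
    NOMINAL = "nominal"
    UNCERTAIN = "uncertain"
    NOVEL_INPUT = "novel_input"


@dataclass
class SafetyMonitor:
    # Hypothetical reference statistics collected from the training images.
    train_brightness_mean: float
    train_brightness_std: float
    # Hypothetical thresholds; real values would be set during validation.
    max_z_score: float = 3.0
    min_confidence: float = 0.5

    def check(self, frame_brightness: float, confidences: list[float]) -> State:
        """Classify the operational state for one camera frame."""
        # Input far from the training distribution: treat as novel or shifted data.
        z = abs(frame_brightness - self.train_brightness_mean) / self.train_brightness_std
        if z > self.max_z_score:
            return State.NOVEL_INPUT
        # Low average detection confidence: treat as high model uncertainty.
        if confidences and mean(confidences) < self.min_confidence:
            return State.UNCERTAIN
        return State.NOMINAL


def intervene(state: State) -> str:
    """Map a detected state to an assumed intervention name."""
    actions = {
        State.NOMINAL: "continue",
        State.UNCERTAIN: "reduce_speed",
        State.NOVEL_INPUT: "hand_over_to_operator",
    }
    return actions[state]


monitor = SafetyMonitor(train_brightness_mean=120.0, train_brightness_std=20.0)
action = intervene(monitor.check(250.0, [0.9]))
```

In this sketch the novelty check runs before the confidence check, on the assumption that confidence scores are themselves unreliable when the input lies far outside the training distribution.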

By developing this framework to complement AMLAS in the complete ML lifecycle, we provide a comprehensive approach to ensure ML safety across both design and operational phases. This can further facilitate the responsible and safe adoption of ML models in ASVs. Further details will be made available following the publication of our paper.

An article by Yunjia Wang.

References

[1] Hawkins, Richard, et al. “Guidance on the assurance of machine learning in autonomous systems (AMLAS).” arXiv preprint arXiv:2102.01564 (2021).

[2] Wang, Yunjia, et al. “Navigating the Waters of Object Detection: Evaluating the Robustness of Real-time Object Detection Models for Autonomous Surface Vehicles.” 2024 IEEE Conference on Artificial Intelligence (CAI). IEEE, 2024.