Evaluating Object Detection Model Robustness in Autonomous Surface Vehicles (ASVs)

The crux of Autonomous Surface Vehicles (ASVs) safety and intelligence revolves around accurate and efficient vision-based object detection. However, the vision-based deep learning models central to ASV intelligence are also known for their susceptibility to various forms of corruption. Ensuring the safety of ASVs necessitates a deep dive into object detection robustness under diverse digital and environmental corruption types. Our research conduct a comprehensive evaluation of leading real-time object detection approaches—YOLOv8, SSD, and NanoDet-Plus—in the context of ASV object detection.

In the era of rapid advancements in artificial intelligence and communication technologies, the development of Autonomous Surface Vehicles (ASVs) has emerged as a focal point of interest. The European Waterborne Technologies Platform (Waterborne TP) initially conceptualized ASVs, setting the stage for an array of unmanned and autonomous vessel projects that followed. Pioneering research, such as the Maritime Unmanned Navigation through Intelligence in Networks (MUNIN) project, has offered optimism, suggesting that fully autonomous vessels are on the horizon, albeit with certain constraints. Noteworthy ASV prototypes, such as the ReVolt developed by DNV GL, and initiatives like the Advanced Autonomous Waterborne Applications Initiative (AAWA), have solidified the path towards autonomous shipping. An example of this progress is YARA Birkeland, the world’s first fully electric autonomous container feeder, which finished its design in 2017, guided by the lessons of MUNIN.

The crux of ASV safety and intelligence revolves around accurate and efficient vision-based object detection. While sensors like LiDAR, radar, and sonar enhance situational awareness, visual cameras excel in object classification and identification, thanks to their rich high-resolution image data. Object detection serves as the bedrock for critical ASV tasks such as object tracking and path planning. Deep learning algorithms for object detection primarily fall into two categories: two-phase and single-stage approaches. The former generates region proposals before classifying each proposal as an object or background (e.g., Region-Based Convolutional Neural Network or R-CNN). In contrast, single-stage approaches bypass explicit region proposal steps, directly predicting object class and location in one pass (e.g., You Only Look Once or YOLO [6], Single Shot Multibox Detector (SSD), and NanoDet). With generally higher speed, the single-stage approaches are usually used for real-time object detection applications such as ASVs.

However, the vision-based deep learning models central to ASV intelligence are also known for their susceptibility to various forms of corruption. While the literature extensively explores adversarial perturbation robustness, our research focuses on corruption robustness, particularly within the context of real-time object detection for ASVs. Object detection models often confront corruption types such as noise, blur, illumination variations, and adverse weather conditions. Identifying the critical corruption types that pose challenges to ASVs, and understanding how they vary across different detection approaches and waterway surface data, presents a formidable task.

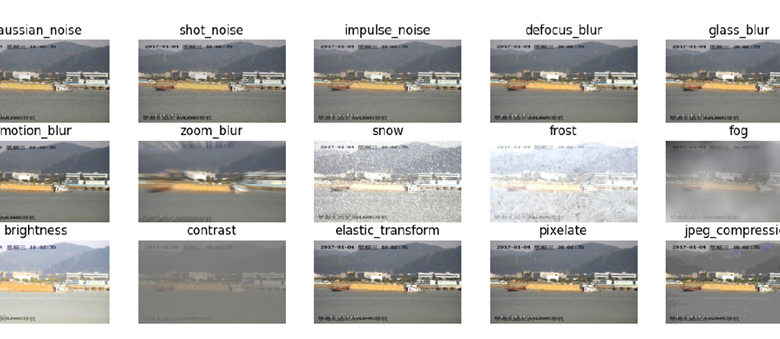

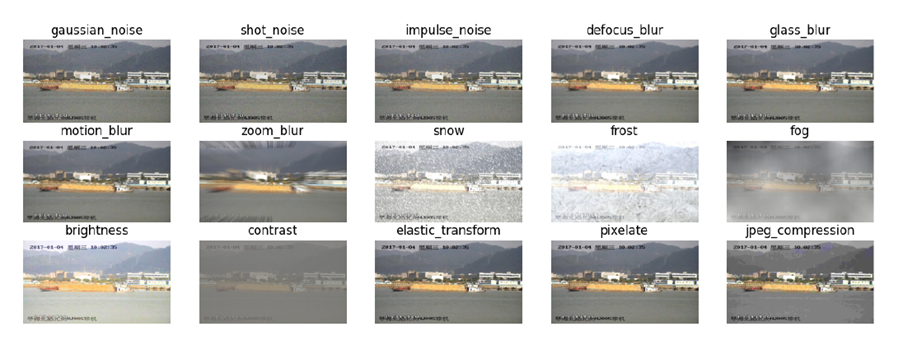

Figure1: An example image under 15 types of corruption at severity 3.

The original image before adding corruption is from SeaShips dataset.

Failures in object detection have significant ramifications, potentially leading to collisions and unacceptable hazards for ASVs. Ensuring the safety of ASVs necessitates a deep dive into object detection robustness under diverse digital and environmental corruption types. While some studies propose strategies to enhance model performance under various weather conditions and blurring (e.g., data augmentation techniques or refined network architectures), a comprehensive evaluation of corruption robustness in the context of ASV object detection is conspicuously absent from existing research.

Our research conducts a comprehensive evaluation of leading real-time object detection approaches—YOLOv8, SSD, and NanoDet-Plus—in the context of ASV object detection. We traverse different model scales, scrutinizing model performance on both pristine and corrupted datasets, while also introducing metrics for evaluating robustness. Our findings not only underscore the susceptibility of these models to specific image corruption types but also highlight their resilience to others. Particularly, we draw attention to corruption types associated with adverse weather or low-lighting conditions, which raise critical concerns within the autonomous shipping domain.

Moreover, a recurring trend emerges throughout our investigation: larger deep learning models tend to exhibit heightened robustness against susceptible corruption types. This trend, consistently observed across multiple datasets and employing various approaches, underscores the role of model scale in influencing corruption robustness. Nevertheless, the underlying mechanisms driving this trend remain an intriguing enigma, leaving the door ajar for future research.

An article by Yunjia Wang